21 Feb

Using GitOps to migrate from a monolith to a microservice architecture

The Problem

- scalability and maintainability

The company had a 10+-year-old monolithic application running on EC2 instances that was becoming increasingly difficult to manage and scale.

- custom scaling scripts

Custom-built scaling scripts not only failed to solve the problem but also became technical debt for the developers.

- collaboration

Communication and collaboration among teams became a major issue as the company expanded to multiple locations, with teams in different countries speaking different native languages.

- impossible to rollback

Because the developers lacked infrastructure expertise, they built fragile DevOps tools that led to slow processes, inadequate monitoring, and no proper rollback strategy.

- supporting two platforms

The company gave up on splitting logic into new microservices and found itself supporting not just two different architectures but also the fragile DevOps tools built by in-house developers.

- distributed monolith

The company ended up with a distributed monolith that was even more challenging to manage than the original monolith.

The Solution

- Technologies used

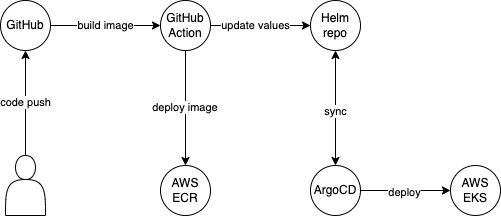

To address the challenges outlined in the problem statement, we built the solution on Amazon EKS as the platform and used the AWS EKS Blueprints Terraform modules to deploy the EKS clusters. For each EKS cluster, we used Terraform to create a GitHub repository of application Helm charts, all of which consume a single library Helm chart. GitHub Actions built the Docker images, and Bitnami Sealed Secrets handled secret management.

- Implementation details

To implement the solution, we created a Terraform module, based on AWS EKS Blueprints, that automates the creation of an EKS cluster with ArgoCD and other Kubernetes components. The module also creates the cluster's application Helm chart repository, which contains all the configuration needed for the microservice deployments. This repository serves as a service catalog and as a contract between the developers and the infrastructure engineers. Each folder in the repository represents a deployment in the EKS cluster and contains an application Helm chart that sources the same shared library Helm chart. Because every application chart builds on that library chart, developers could easily understand and configure the connection strings, databases, and other components their microservices required.
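
To make the application/library split more concrete, here is a minimal sketch that renders the `Chart.yaml` of a single deployment folder. The chart names, versions, and repository URL are hypothetical placeholders, and the article does not show its actual chart files. The Helm mechanism itself is standard: a chart declared with `type: library` cannot be installed on its own, and application charts pull it in through `dependencies` so they can reuse its templates.

```python
"""Sketch: render Chart.yaml for an application chart that reuses a shared library chart.

All names (chart names, versions, repository URL) are hypothetical placeholders.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class LibraryChart:
    name: str        # hypothetical shared library chart name
    version: str     # version constraint passed to Helm, e.g. "^1.0.0"
    repository: str  # hypothetical Helm repository URL


def render_app_chart(app_name: str, app_version: str, library: LibraryChart) -> str:
    """Return Chart.yaml text for one deployment folder.

    The application chart lists the library chart under `dependencies`,
    so every deployment folder shares the same templates and values layout.
    """
    lines = [
        "apiVersion: v2",
        f"name: {app_name}",
        "type: application",
        "version: 0.1.0",
        f'appVersion: "{app_version}"',
        "dependencies:",
        f"  - name: {library.name}",
        f'    version: "{library.version}"',
        f"    repository: {library.repository}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    lib = LibraryChart("service-library", "^1.0.0", "https://charts.example.com")
    print(render_app_chart("orders-api", "2.3.1", lib))
```

Because each folder contains only this thin chart plus its values, the library chart is the single place where the deployment conventions live. This is what lets the repository act as a contract between developers and infrastructure engineers.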

We also used GitHub Actions for automation: it automatically built new Docker images whenever the source code changed. ArgoCD then updated the respective application Helm chart with the new SemVer 2.0.0 tag, ensuring that the microservices were always up to date and that other teams could draw conclusions about each change from the SemVer number alone. Adding a new folder to the repository meant adding a new namespace to the EKS cluster, and deleting a folder meant removing the respective namespace from the EKS cluster.
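
The folder-to-namespace rule described above amounts to a set difference between the folders in the repository and the namespaces the workflow already manages. The sketch below is hypothetical. It assumes that a folder's name is used verbatim as the namespace name and that `managed` contains only namespaces created by this workflow. The article does not say how the rule was wired up. In Argo CD, it is commonly implemented with an ApplicationSet that uses a Git directory generator.

```python
"""Sketch: derive namespace changes from deployment folders in the chart repository.

Hypothetical assumptions: each top-level folder containing a Chart.yaml is one deployment,
its folder name is used verbatim as the namespace name, and `managed` holds only the
namespaces this workflow created (never system namespaces such as kube-system).
"""
import re
from pathlib import Path

# Kubernetes namespace names must be RFC 1123 DNS labels (at most 63 characters).
DNS_LABEL = re.compile(r"[a-z0-9]([-a-z0-9]*[a-z0-9])?")


def is_valid_namespace(name: str) -> bool:
    return len(name) <= 63 and DNS_LABEL.fullmatch(name) is not None


def desired_namespaces(repo_root: Path) -> set[str]:
    """Return one namespace name per deployment folder found in the repository."""
    names: set[str] = set()
    for entry in repo_root.iterdir():
        if entry.is_dir() and (entry / "Chart.yaml").is_file():
            if not is_valid_namespace(entry.name):
                raise ValueError(f"folder {entry.name!r} is not a valid namespace name")
            names.add(entry.name)
    return names


def plan(desired: set[str], managed: set[str]) -> tuple[set[str], set[str]]:
    """Adding a folder creates a namespace; deleting a folder removes its namespace."""
    to_create = desired - managed
    to_delete = managed - desired
    return to_create, to_delete
```

Deleting a namespace removes everything inside it. Restricting `managed` to workflow-created namespaces therefore keeps an unrelated namespace from being deleted just because it has no matching folder.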

To help with the transition, we provided training sessions and support to the organization’s staff. Even so, it took some time for the developers to fully adopt our approach, as they were still getting used to the new way of versioning, building, and deploying services. Initially, our support engineers were in high demand because developers were still copy-pasting values from the folders of other deployments. After a month of support and training, however, the developers were able to spin up DEV and QA environments on their own and had fully adopted the new approach.

- Issues addressed

- collaboration: The solution improved collaboration among teams by implementing a GitOps workflow, and the use of Helm charts made it easy for developers to understand and configure the connection strings, databases, and other components required for their microservices. The company also started using Spotify’s Backstage to share and document the microservices, which further improved collaboration among teams. Using SemVer 2.0.0 reduced the need for human communication about new features, breaking changes, and bug fixes.

- security: By implementing secrets management with Bitnami Sealed Secrets, the company improved the security of its infrastructure. Sealed Secrets encrypts secrets so that only the controller running in the cluster can decrypt them. As a result, sensitive data such as database credentials can be kept in Git without being stored in plain text and is accessible only to authorized personnel. In addition, using AWS EKS Blueprints and Terraform for EKS cluster deployment ensured that the infrastructure was deployed in a secure and compliant manner.

- integrity: The solution improved quality by making it possible to spin up DEV and QA environments, where teams could test and monitor the microservices the same way they would in production. The company also started using Prometheus to monitor the microservices, which further improved the overall quality of the services.

- rollback: The GitOps workflow also made rollbacks possible. Teams could roll back to a previous version of a microservice when issues arose, which was a major step toward reducing the mean time to resolution (MTTR).

- scalability: By using Kubernetes as a platform and implementing a microservices architecture, the company was able to achieve improved scalability. The ability to deploy and manage individual microservices independently allows for easy scaling of specific services without affecting the entire system.
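
The collaboration point above credits SemVer 2.0.0 with replacing much of the human communication about changes. The sketch below shows the kind of inference a consuming team can make from a version change alone. The function and label names are hypothetical, and the pattern is a simplified form of the SemVer 2.0.0 grammar rather than the specification's own regular expression.

```python
"""Sketch: what a consumer can infer from a SemVer 2.0.0 version change.

Pre-release and build metadata are parsed but ignored when classifying a change.
"""
import re
from typing import NamedTuple, Optional

# Simplified SemVer 2.0.0 pattern: MAJOR.MINOR.PATCH[-PRERELEASE][+BUILD].
# It does not reject leading zeros in numeric pre-release identifiers.
SEMVER = re.compile(
    r"(0|[1-9][0-9]*)\.(0|[1-9][0-9]*)\.(0|[1-9][0-9]*)"
    r"(?:-([0-9A-Za-z-]+(?:\.[0-9A-Za-z-]+)*))?"
    r"(?:\+([0-9A-Za-z-]+(?:\.[0-9A-Za-z-]+)*))?"
)


class Version(NamedTuple):
    major: int
    minor: int
    patch: int
    prerelease: Optional[str] = None
    build: Optional[str] = None


def parse(tag: str) -> Version:
    match = SEMVER.fullmatch(tag)
    if match is None:
        raise ValueError(f"not a SemVer 2.0.0 version: {tag!r}")
    major, minor, patch, prerelease, build = match.groups()
    return Version(int(major), int(minor), int(patch), prerelease, build)


def classify(old: Version, new: Version) -> str:
    """Classify the change from `old` to `new` by comparing MAJOR.MINOR.PATCH only."""
    old_core, new_core = old[:3], new[:3]
    if new_core < old_core:
        return "downgrade"            # e.g. a rollback to an earlier tag
    if new_core == old_core:
        return "no-core-change"       # only pre-release/build metadata differs
    if new.major > old.major:
        return "breaking"             # MAJOR bump: incompatible API change
    if new.major == 0:
        return "initial-development"  # 0.y.z: anything may change at any time
    if new.minor > old.minor:
        return "feature"              # MINOR bump: backward-compatible functionality
    return "fix"                      # PATCH bump: backward-compatible bug fix
```

For example, a team that depends on a service tagged `1.4.2` can treat `1.5.0` as safe to adopt and `2.0.0` as requiring a review, without asking the owning team. From a consumer's point of view, a `downgrade` result is what a GitOps rollback to an earlier tag looks like.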

- Training and support

- training: We conducted several training sessions for the organization’s staff, which covered topics such as microservices architecture, GitOps, Kubernetes, AWS, and Terraform. The training sessions were interactive and hands-on, allowing the staff to practice and apply the concepts they learned.

- support: Our support engineers helped the staff with any questions or issues they encountered during the transition. This included providing guidance on how to use the new tools and practices, troubleshooting any issues that arose, and giving feedback on the staff’s work.

- knowledge sharing: We encouraged the staff to share their knowledge and best practices with their colleagues, which improved the overall understanding and adoption of the new technologies and practices. We also provided documentation on the new technologies and practices for the staff to refer to as needed, and the organization added this documentation to its employee onboarding track.

- continuous improvement: We continuously monitored the staff’s progress and provided feedback on their work. This helped to identify areas where the staff needed additional training or support and allowed us to make adjustments to the training and support program as needed.

- availability: Our team was available 24/7 to help with any issues that arose, which let the staff focus on their work without worrying about the infrastructure.